Training and Inference

A key purpose of collecting labeled data is to train and validate ML models, which can then automatically infer or classify information as new data arrives. These tasks generate and consume a significant amount of data that needs to be stored and managed efficiently. ApertureDB can serve as the source for your training and validation data and as the destination for any new metadata derived from inference. It integrates with popular ML frameworks and libraries such as PyTorch and TensorFlow.

General How-to

ApertureDB supports mixed media types such as images, videos, text, audio, and documents. This means it can serve as a storage system for ground truth data throughout all stages of the ML workflow, including:

- Labeling and Annotation: ApertureDB natively recognizes different types of annotations such as labels, bounding boxes, polygons, clips, supporting ML tasks like classification, object detection, or object identification. See Labeling Workflows for more information about labeling integrations.

- Rapid Prototyping: ApertureDB provides a robust, powerful, and efficient query language for filtering data. This allows you to form ad hoc subsets of your dataset for rapid experimentation. Data from various dataset repositories can be colocated in ApertureDB and then filtered as appropriate, for example into train, validation, and test datasets.

- Dataset Preparation: Built-in transformations for images and videos, such as cropping, resizing, flipping, rotating, thresholding, and clip extraction, allow the data to be adapted to the specific needs of the model and support on-the-fly data augmentation without requiring unnecessary copies. Augmenting data on the server side is also faster because of its proximity to the data, and it often results in a significant reduction (60%-6X) in bandwidth requirements, since the most common operations involve sampling data down.

- Training and Testing: ApertureDB can act as a dataset generator for your machine learning framework of choice. Data can be filtered and augmented into train, validation, and test datasets. ApertureDB's batching and pipelining capabilities enable efficient, large-scale, parallel training and inference runs. Platforms like Vertex AI also integrate with ApertureDB for highly scalable workflows.

-

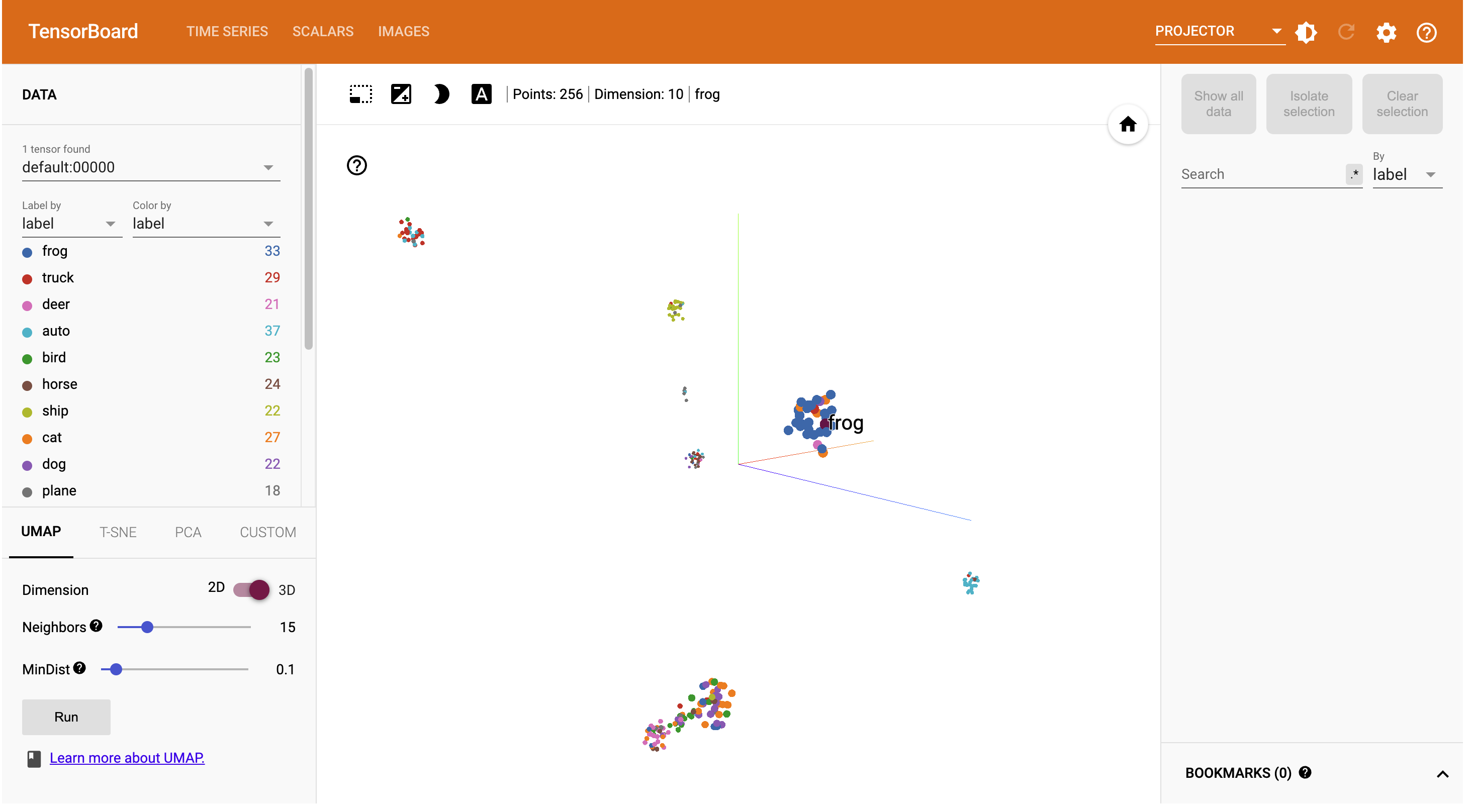

Inference: ApertureDB's fast and scalable vector lookup feature allows for efficient matching of descriptors or embeddings found during inference with labeled examples. This means that the embedding model can be used to infer classifications for new images.

- Enhance: Results of inference pipelines, such as new classifications, annotations, or embeddings, can be stored back into ApertureDB, making them available for downstream queries and analytics.
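The filtering and server-side preparation steps in the list above can be sketched as a query built in Python. This is a minimal sketch, assuming ApertureDB's JSON query format with a `FindImage` command that accepts `constraints`, `operations`, `results`, and `limit`. The `split` and `label` property names and the `build_split_query` helper are hypothetical, chosen only for illustration. The code only builds the query and does not contact a server.

```python
import json


def build_split_query(split, width=224, height=224, limit=None):
    """Build a FindImage query for one dataset split (hypothetical schema).

    Assumes each image carries a "split" property ("train", "val", "test")
    and a "label" property; adjust both to match your own metadata.
    """
    find_image = {
        # Select only the images that belong to the requested split.
        "constraints": {"split": ["==", split]},
        # Ask the server to resize before returning, so less data is transferred.
        "operations": [{"type": "resize", "width": width, "height": height}],
        # Return the label alongside each image blob.
        "results": {"list": ["label"]},
        "blobs": True,
    }
    if limit is not None:
        find_image["limit"] = limit
    return [{"FindImage": find_image}]


# One query per split, all sharing the same server-side resize.
queries = {name: build_split_query(name) for name in ("train", "val", "test")}
print(json.dumps(queries["train"], indent=2))
```

Keeping the split as a property constraint rather than a separate copy of the data means the same stored images can be regrouped for a new experiment by changing only the query.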

Integration with PyTorch

As part of our Python SDK, we provide classes that can be used to integrate ApertureDB with PyTorch.

PyTorchData is a wrapper for datasets retrieved from PyTorch. With the construction of an appropriate query, it can be used to load such a dataset into ApertureDB. See CocoDataPyTorch.py for an example of how this can be done.

ApertureDBDataset provides a general framework for driving PyTorch training and inference with a dataset stored in ApertureDB. See pytorch_classification.py for an example of how to use it for image classification.
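To illustrate the general idea behind a query-driven dataset, here is a minimal sketch. It is not the SDK's `ApertureDBDataset` implementation. It follows PyTorch's map-style dataset protocol (`__len__` and `__getitem__`) without importing PyTorch, and the `QueryBackedDataset` class, the `run_query` callable, and the fake in-memory backend are all hypothetical stand-ins for a real ApertureDB connection.

```python
class QueryBackedDataset:
    """Map-style dataset that lazily pulls (image, label) pairs in batches."""

    def __init__(self, run_query, total, batch_size=2):
        # run_query(offset, count) -> list of (image_bytes, label) tuples.
        # In a real setup this would issue a query to the database.
        self.run_query = run_query
        self.total = total
        self.batch_size = batch_size
        self._cache_start = None
        self._cache = []

    def __len__(self):
        return self.total

    def __getitem__(self, index):
        if not 0 <= index < self.total:
            raise IndexError(index)
        start = (index // self.batch_size) * self.batch_size
        if start != self._cache_start:
            # Fetch the whole batch containing `index` in one round trip.
            count = min(self.batch_size, self.total - start)
            self._cache = self.run_query(start, count)
            self._cache_start = start
        return self._cache[index - start]


# Fake backend standing in for the database: five tiny placeholder "images".
records = [(bytes([i]) * 4, i % 2) for i in range(5)]
calls = []


def fake_run_query(offset, count):
    calls.append((offset, count))
    return records[offset:offset + count]


dataset = QueryBackedDataset(fake_run_query, total=len(records), batch_size=2)
labels = [dataset[i][1] for i in range(len(dataset))]
print(labels)  # labels in index order
print(calls)   # one backend call per batch, not per item
```

Fetching by batch rather than by item is the design choice that matters here: sequential access triggers one backend call per batch, which is the kind of access pattern that batching on the database side is meant to serve.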

Further examples and more information about integrating PyTorch with ApertureDB are available:

Integration with TensorFlow

As part of our Python SDK, we provide classes that can be used to integrate ApertureDB with TensorFlow.

TensorFlowData is a wrapper for datasets retrieved from TensorFlow datasets. With the construction of an appropriate query, it can be used to load a dataset into ApertureDB. See Cifar10DataTensorFlow for an example of using it to load an image dataset.
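The loading direction can be sketched in a framework-neutral way. This is a minimal sketch, not the SDK's `TensorFlowData` implementation, assuming ApertureDB's `AddImage` command with a `properties` field and a loader that consumes (query, blobs) pairs. The `to_add_image_items` helper, the property names, and the placeholder byte strings are hypothetical.

```python
def to_add_image_items(samples, dataset_name):
    """Yield (query, blobs) pairs, one per (image_bytes, label) sample."""
    for index, (image_bytes, label) in enumerate(samples):
        query = [{
            "AddImage": {
                # Hypothetical property names; pick ones that suit your schema.
                "properties": {
                    "dataset": dataset_name,
                    "index": index,
                    "label": label,
                }
            }
        }]
        # Each AddImage command is paired with exactly one blob.
        yield query, [image_bytes]


# Placeholder byte strings stand in for encoded images from a framework dataset.
samples = [(b"image-bytes-0", "cat"), (b"image-bytes-1", "dog")]
items = list(to_add_image_items(samples, "demo"))
print(len(items))
print(items[0][0])
```

Writing the conversion as a generator keeps memory use flat for large datasets, since each sample is turned into a query only when the loader asks for it.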

Prerequisites

See Setup Guide for more information:

- An active ApertureDB instance

- ApertureDB client package, installable with pip

- PyTorch or TensorFlow installed

Please reach out to us (team@aperturedata.io) for more details or to discuss other frameworks.